
This is Not a Drill

Lessons from the Hawaii False Missile Alert

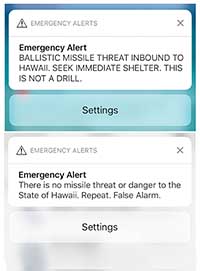

A little over one year ago, the Hawaii Emergency Management Agency sent out a blaring alert to untold thousands of people in the Hawaiian Islands: BALLISTIC MISSILE THREAT INBOUND TO HAWAII. SEEK IMMEDIATE SHELTER. THIS IS NOT A DRILL.

It can feel difficult to remember now, but the alert came on the heels of a period of high tension between the United States and North Korea, when leaders of both countries were bragging about their nuclear capabilities and the possibility of war seemed very real.

It was, as it indicated, not a drill. But it wasn’t a real sign of an attack. Though accounts of the exact circumstances remain conflicting and unclear even today, it is clear that a Hawaii Emergency Management Agency (HI-EMA) employee sent the signal in what was quickly realized to be an error, whether because he really believed that an attack was inbound or because he was disgruntled or otherwise confused by the interface. Despite the magnitude of the warning and the possible effects of sending such a thing to so many people trying to live through their Saturday mornings as usual, it took considerable time for a retraction to be sent out on the same system.

Reuters/Hugh Gentry

In the immediate aftermath of the warning, experts, myself included, opined on the meaning of the alert. Most pointed to this incident as a sign of our renuclearized times and as a continuation of the legacy of false alarms in the Cold War. Some asked what would have happened if, for example, the president of the United States had gotten the same alert and acted on it, or if it had come at a time of higher international tension. And many remarked on the fact that the most common sentiment from those exposed to the message was fatalistic ignorance: they didn’t know what to do and they didn’t know whether there was anything they could do if the alert had been real.

As a scholar of nuclear issues, I flew to Honolulu last January to take part in a workshop, co-organized by Atomic Reporters and the Stanley [Center], titled “This Is Not a Drill,” which brought together experts with a large number of journalists to talk about the false alarm and its lessons, with the hindsight of a year.

From my perspective as a nonjournalist, the discussion was instructive, especially as it brought together old veterans of the trade with the up-and-coming journalists whose reference point for the industry was very different. All agreed that journalism, in this age of dense networks and fast communication, had an important role to play when it came to processing events such as the Hawaii alert, as well as helping the public know whether to take such things seriously. It was a local Hawaiian news station that first reported that the alert was false, well ahead of official sources.

Personally, much of the value of the experience came from hearing the stories that the residents of Hawaii told about the alert. Some of these were formally delivered, such as when we visited the East-West Center and talked to staff and faculty there. Others were informal, such as those told by a Lyft driver who took me back to the hotel after a dinner out and by the taxi driver who took me back to the airport. While those encounters are not a scientifically rigorous selection, they do illustrate some of the responses to the alert, aside from the now-famous footage of a father trying to stuff his children down a manhole, which is probably an exceptional response.

My Lyft driver looked like she was probably in her twenties and had lived in Hawaii most of her life. She told me she didn’t think the alert was real because if it was, they’d also sound the tsunami-alert sirens—an interesting assumption. The taxi driver who took me to the airport, by comparison, believed the alert was real (“it was on the TV!”) but was, at least in his recollection, more sanguine about it: “I was sitting in my kitchen, and I had finished a cup of coffee. I thought, ‘I should not have more coffee.’ But then I saw the alert, and I thought, ‘I can have one more cup of coffee.’”

These are only two examples, but they are perhaps revealing. Research has shown that perception of the likelihood of war is part of what leads someone to take an alert like this seriously and that younger people tend to rate the risk of war lower than those who are older. And historical research on false alarms has indicated that the ability to confirm an alert through another source is also important. In this case, the younger woman felt the alert was not confirmed, because of the lack of the tsunami siren, while the older man considered that it was confirmed since he saw it on the television as well. Of course, any recollections are going to suffer from memory biases so far after the fact, but they do align well with what we know about human behavior more generally.

I recently had the chance to review a 1960 study of false air-raid alarms, which looked at how the residents of three American cities responded to unscheduled air-raid alerts in the late 1950s. The circumstances of the three false alerts were very different: in Oakland, bombers were actively spotted offshore and could not be immediately identified, but it was eventually discovered they were American; in Washington, DC, a wire was literally crossed by the phone company, activating the alarm in the middle of the workday; in Chicago, in an ill-conceived attempt to celebrate the White Sox winning the American League pennant for the first time in 40 years, the fire commissioner ran the sirens late in the evening. But in all cases there was no way for the citizens of the cities to know the alerts were false.

Kyodo News/Getty Images

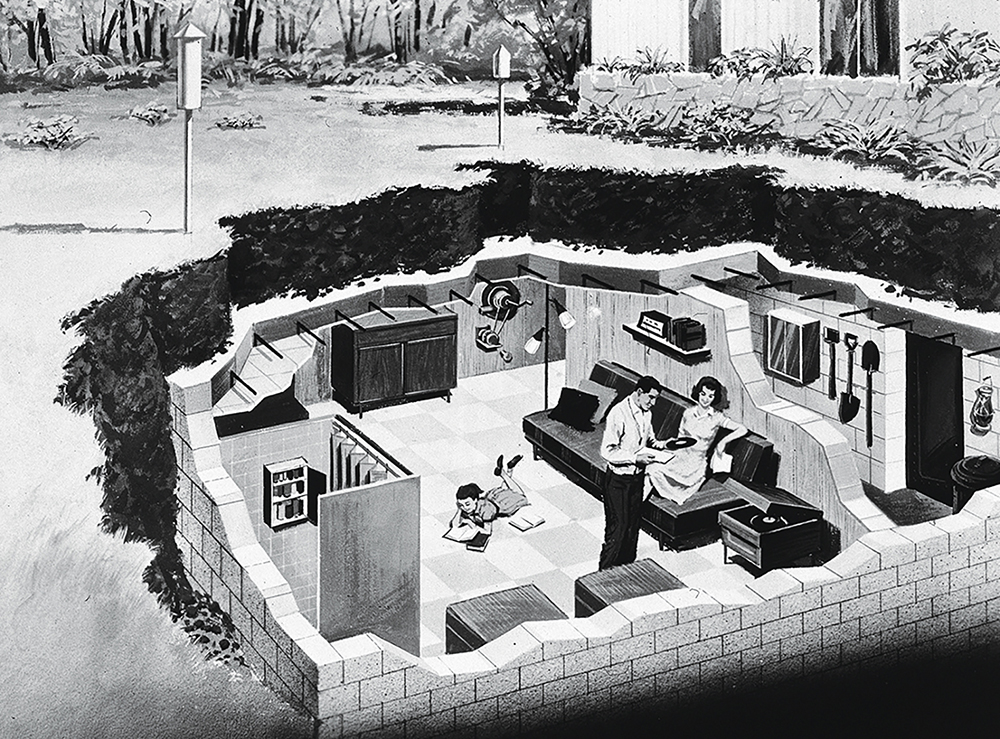

Perhaps contrary to our modern expectations, even in the immediate glare of Sputnik, most of those exposed to the alerts did not take them seriously. Even among those who did think they might be indications of a real attack (about a quarter of those surveyed), few actually did anything about it, such as taking protective action. And those were people who, by and large, had been told what to do: Civil Defense actually existed as a public program, public shelters were marked and well maintained, and some of these people were actually well trained with respect to what they were supposed to do (notably those in DC and Oakland).

The exact reason for nonaction could not be easily identified, but in general, women took the alerts more seriously than men (especially if they had children), and those who were of a “medium” education level (high school but no college) took the alerts more seriously than either the “undereducated” (no high school) or “overeducated” (college). The study authors concluded that those of a higher social class tended to disbelieve that all of their accomplishments could come crashing down around them in an instant, while those of lower social classes tended to be more ignorant about what to do. Overall, those who believed that nuclear war was possible in the short term were more inclined to take the alerts seriously than those who did not.

As with Hawaii, one of the first actions that people pursued was to attempt to confirm or disprove the alert. That meant turning on televisions if people were home or trying to locate radios. It also meant using telephones to confirm with emergency officials, something that also occurred in Hawaii—many people called 911. As with Hawaii, these confirmation attempts could fail: newscasters often did not know more than the average person in the first minutes following the alert (minutes that could mean life or death were the attack real), and the excessive strain on the telephone service clogged the lines (which for some seemed to confirm the alert as real).

We don’t have data as good as we might wish on what people did after the Hawaii alert; we do not even seem to know how many people received it. Overall, it appears this was a missed opportunity to study American attitudes toward a nuclear war. The reportage seems to indicate that many of those who got the alert took it seriously, or immediately turned to other sources of confirmation, especially social media, to see whether they should take it seriously. It also indicates that many people, perhaps unlike in the most tense period of the Cold War, had no real sense of what they ought to do or whether they could do anything. (The official recommendation is to “shelter in place”; getting inside, preferably a large building or basement, can mitigate the effects of a nuclear explosion, assuming it does not occur directly above you.)

Hulton Archive/Getty Images

All of this points to interesting perils and promises of social media and ubiquitous smartphones. The false alert was, of course, enabled by these technologies—a possible peril. But many of those in Hawaii, especially younger residents, reported turning to their smartphones for assistance, both in looking up what to do and in trying to confirm or disprove the alert. This new connectivity, far broader than the television and radio of the 1950s, points to future directions for thinking about alerts, real or not.

Social media is a double-edged sword here: never before have so many people been able to instantly communicate with one another. If what they communicate is falsehoods, then a world of falsity results. If what they communicate is truth, then we get a world of truth. In the moments that might determine life versus death, correct action versus wrong, panic versus confidence, having better mechanisms in place for credibly communicating whether a given scare is worth taking seriously and, if it is, what ought to be done could save effort and maybe even lives. The news is no longer a single, one-directional form of information: on social media, we all participate in the curation and even creation of the news of our day. Again, this holds promise and peril, but either way, it is the world we live in, and it complicates older, one-directional models—“here’s what you need to know, here’s what you need to do”—of both risk communication and emergency management that still dominate official approaches.

Unfortunately, the main result of the Hawaii false alarm does not seem to have been a positive one. Aside from the frustrations, anger, and perhaps even medical consequences of the false alert (one lawsuit alleges that the alert triggered the plaintiff’s heart attack), there was another result: HI-EMA has canceled its ballistic missile alert program out of fear that another false positive would do too much reputational damage. While the dangers of a false positive should be taken seriously (at a minimum, they desensitize people to real alerts; at a maximum, they cause unnecessary fear, panic, and perhaps even real physical harm), we do live in a world where the risk of a ballistic missile attack is not zero. Wishing the threat away doesn’t help, and neither does the false impression that “there is nothing you can do.” Americans ignore nuclear risks, and nuclear alerts, at their peril.

Alex Wellerstein is a historian of nuclear weapons at the Stevens Institute of Technology, a coprincipal investigator on the Reinventing Civil Defense Project (funded by the Carnegie Corporation of New York), and the creator of NUKEMAP, an online nuclear weapons effects simulator. His work has appeared in the Washington Post, Harper’s Magazine, the New Yorker, and other outlets. He blogs at blog.nuclearsecrecy.com.

In January 2019, the Stanley Center and Atomic Reporters hosted the four-day “This Is Not a Drill” journalism workshop in Hawaii, just ahead of the one-year anniversary of the false ballistic missile alert that occurred there. Workshop participants revisited that incident and explored new dimensions of nuclear risk in the digital age.

This article was written for the Stanley Center, and we encourage others to share its important message, with attribution.