Ethics in the Age of OSINT Innocence

The pace at which technologies emerge and evolve often outstrips the pace at which institutions and bureaucracies can respond. These technologies could create opportunities or pose risks for avoiding the use of nuclear weapons.

The increased availability and lower cost of satellite imagery have made it accessible to civil society in recent years. While universities, think tanks, and nongovernmental organizations are racing ahead to incorporate this form of open source intelligence (OSINT) into their regular research work, there are a number of unexamined areas that our team at the Open Nuclear Network (ONN) wanted to explore. Are open source analysts facing ethical dilemmas? If they are, how are they resolved? What resources exist to support them in making such decisions?

Difficult Decisions

In 2017, North Korea released a series of photos of a silver, round device—a purported nuclear warhead. Melissa was gripped with the desire to understand everything in the photo. On the one hand, she wanted to determine if the silver orb was credible. Had North Korea now demonstrated a device small and light enough to put on the tip of not one but several of its missiles? Using the missile in the background, Kim Jong Un’s height, and photos of the inside of the building from several angles, she made some realistic guesses on the size of the object and closely examined each wire, hexagon, and pentagon on the surface.

After Melissa got over how much she was able to learn, she became worried about how much she should share about weapons design. She chose not to publish her measurements or her analysis of the object in comparison to other images of warheads. Even today, both of us grapple with the weightier dilemmas of this case. Given that we want the public to understand and make good decisions about nuclear weapons, how do we weigh (1) proliferating information that could enable the design of future weapons, and (2) lending credibility to a propaganda campaign that threatened the region?

Thus, ONN joined the Stanley Center for Peace and Security to embark on a joint project to better understand the landscape of ethics in the field of open source informational analysis of nuclear weapons. As researchers ourselves, we constantly face small, medium, and large dilemmas. Weighing proof and privacy is just one example.

Appetite for Guidance

Our mission at our new organization is to reduce the risk of the use of nuclear weapons in response to error, uncertainty, or misdirection, particularly in the context of escalating conflict. This requires us to be not only accurate but trustworthy as well. We cannot hope to have a positive impact without building ethical best practices from the start. We are conscious of how questions about ethics are intricately linked with power dynamics. This is particularly true in a field dealing with complex national and international security issues, (big) data analytics, and mass media. We believe that such power should be guided by an adaptive body of community norms, best practices, and collaborative peer review.

To this end, ONN and the Stanley Center convened a workshop in Boulder, Colorado, that brought together representatives of major research institutions in the field, individual consultants, journalists, and representatives of satellite companies. We sought balanced representation, and in doing so identified our first red flag: open source geospatial analysis is primarily driven by North America and Europe, with little representation from Asia, Africa, or South America.

As we convened the meeting with the facilitation of the Markkula Center, it was apparent that the attendees were all eager to participate. We had anticipated some competitiveness and insularity in the group, but everyone came with an open mind, and many came with a list of concerns that they had already put together. Even those who could not attend sent input and feedback. The publication of The Gray Spectrum: Ethical Decision Making with Geospatial and Open Source Analysis in January 2020 led to even more input, making it clear there is a hunger for resources such as frameworks and peer-consultations on ethical decision making.

While the workshop was held under the Chatham House Rule to facilitate more-open dialogue, we made several overarching observations we can share. First, analysts worry a great deal about the consequences of their work. In addition to their desire to positively contribute to international security, they also feel pressure to always be accurate, fast, and newsworthy. These pressures can pull them in different directions. Second, there are almost no resources for them to consult, and what few there are generally target journalists, human rights activists, and/or scientists. Finally, while participants recognized that poor ethical decision making by one could affect the reputation of the whole, few had the time or financial resources to develop the resources, procedures, or interorganizational peer reviews they wanted.

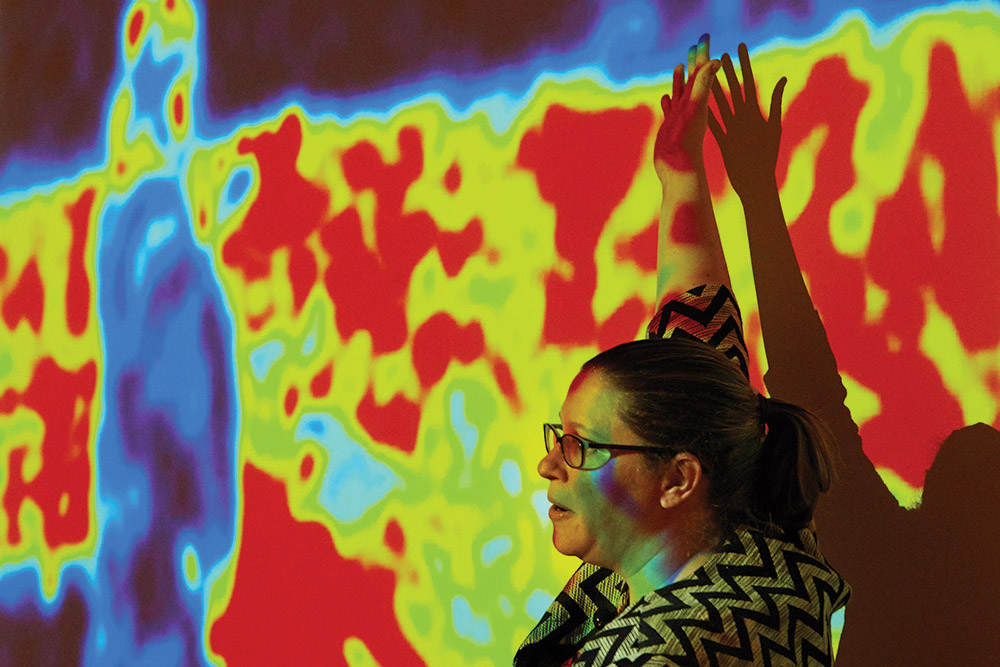

Photo courtesy Middlebury Institute’s James Martin Center for Nonproliferation Studies

An Environmental Approach

ONN’s own code of ethics immensely benefited from the collaborative effort at the joint workshop with the Stanley Center and the broader community.[i] Many of the existing frameworks[ii] rely on either agentic responsibility ethics (process and impact focused) or rights-based patient evaluation (consent and harm focused), whereas the digital and data-centric nature of our work requires some combination of the two. This comes closest to what has been called an “environmental approach to the digital divide” in academic literature[iii] and puts the focus on assuming responsibility over an informational environment as the moral patient of ethical conduct.

While the appeal to ethics in research is typically perceived to be a conservative act, in hindrance to scientific progress,[iv] we argue that explicit ethical standards and codes can contribute to ultimately more-accurate and legitimate intelligence. Credibility is of primary importance to independent nongovernmental actors operating in the open source field, and being able to demonstrate that there is a clear concern over possible dilemmas and deliberate processes in place to navigate them is a powerful demonstration of an organization’s commitment to its declared mission.

In our view, an ideal code of ethics should start from an internal translation of an organization’s values and mission to principles guiding the more-specific quandaries that analysts—particularly analysts from different backgrounds—face throughout the stages of the research process. Am I putting an individual’s security at risk? Am I biased in a way that significantly undermines the independence of my analysis? Am I providing enough context so as not to misrepresent or oversimplify developments?

Collaborating within the Community

We suggest investing appropriate time and collaborative space in developing any code to make the process as inclusive and nonhierarchical as feasible. This can then naturally result in a consensus decision to adopt a code by all staff, which is important for internal and external reasons. We wish to assure the public of our intentions while giving analysts safe haven for creativity in their work before the arduous task of vetting for publication. Such a code should not, however, be intended to serve as an “ethical checklist” ensuring that analysts will no longer have to deal with the case-by-case complexity of ethical decision making. Organizations should expect commonly used notions, such as reasonable expectation and harm, to be interpreted in more-contextually and culturally appropriate ways through continued practice and regular self-reflection, both individually and as a team.

ONN’s own code relies on internal processes, ensuring that there is at all times a consideration for weighing social good and possible harm, independence, accountability, and transparency. Primary responsibilities of paramount importance include the principle to serve the global good, and to uphold transparency, accuracy, and independence. Analysts shall defend freedom of and respect for information, and factual information ought to be distinguished from commentary, criticism, and advocacy.

Accompanying our code, we will provide training and resources to our staff for not only technical but also ethical capacity building. We have begun training on structured analytic techniques that have been shown to reduce the risk of biases and mistaken assumptions. ONN will continue to train with other frameworks, such as the one introduced by the Markkula Center.[v] Even as we seek to improve the speed of our analysis, we do not want to reduce accuracy or risk harm. ONN is also beginning to organize a structured process of internal peer review and red teaming, with outside consultation from trusted third parties. This method will allow us to test and validate our analyses before publishing. We are preparing processes for handling differences of opinion within our team, and for the quick, public, and rigorous correction of any errors in our work.

Ultimately, the broader community of open source analysts still needs toolkits. We hope our experience and collaboration with the Stanley Center leads to a strong foundation for the community to identify what is needed next. These resources cost time and money, and the foundations and governments that fund civil society should take heed that they need to invest in these ethical capabilities in addition to technical ones. We must remember that neither the legal access to information nor the technical capability to interpret it translates into an ethical justification to publish. Like doctors, we must first do no harm.

Melissa Hanham is the Deputy Director of Open Nuclear Network (ONN), a program of One Earth Future, and also directs its Datayo Project. She is an expert on open source intelligence, incorporating satellite and aerial imagery and other remote sensing data, large data sets, social media, 3D modeling, and GIS mapping for her research on North Korea and China’s weapons of mass destruction and delivery devices.

Jaewoo Shin is an Analyst for ONN, where he focuses on developments on the Korean Peninsula and Northeast Asia, with particular attention to nuclear risk reduction and regional nuclear and missile programs. He has a special focus on text analysis to understand related trends.

As part of our work in the field, the Stanley Center partnered with Open Nuclear Network as a leader in the geospatial and open source analysis communities to explore how ethics could help govern open source intelligence and safely extend the critical contributions it makes to creating a safer world.

References

[i] In addition, we embraced existing work by the Society of Professional Journalists, the International Federation of Journalists, the Responsible Data movement, and the Berkeley Center for Human Rights, while identifying where the specificities of our field require adaptations.

[ii] See Gray Spectrum.

[iii] Luciano Floridi, “Information Ethics: An Environmental Approach to the Digital Divide,” Philosophy in the Contemporary World 9, no. 1 (2002): 39-45.

[iv] Effy Vayena and John Tasioulas, “The Dynamics of Big Data and Human Rights: The Case of Scientific Research,” Philosophical Transactions of the Royal Society A 374, no. 2083 (2016): 20.

[v] See Gray Spectrum.
